AI in Mortgage Lending: The Vendor Questionnaire Every Lender Needs

TL;DR

If a vendor wants to sell you “AI,” don’t start with the demo. Start with the rules.

In mortgage lending, you are responsible for what your vendors do with borrower data and how their tools influence decisions.

A strong AI vendor questionnaire should focus on four things: privacy, fairness, explainability, and consistency, because those are the areas regulators care about most.

But you don’t need to start with an exhaustive list of questions. This article covers the top things to ask so you can filter out tech that will never meet your Compliance team’s requirements.

A Little Context...

“AI” is everywhere in mortgage tech right now.

Some tools talk to borrowers. Some summarize documents. Some flag conditions. Some recommend next steps. Some try to predict fallout, compliance risk, or likelihood to close.

A lot of that sounds harmless until you remember one simple truth:

In mortgage lending, small decisions stack up.

A message to a borrower. A document classification. A flagged exception. A suggested condition. A recommended product. A timing change in disclosures. A call script. A lead priority score.

None of those things may look like underwriting by themselves. But together, they can influence who gets help, who gets fast service, who gets a second look, and who gets denied.

The CFPB has been clear that lenders can’t hide behind “complex algorithms” when they take an adverse action (like denying a loan or offering worse terms). If your system can’t explain the real reasons, that’s a problem.

Federal agencies have also warned that “automated systems” are not an excuse for discrimination. If a tool creates unfair outcomes, the lender can still be held responsible.

So here’s the mindset shift:

Buying AI isn’t just buying software. It’s adding a decision-maker, or, at a minimum, a decision influencer, to your workflow.

And decision-makers must follow mortgage rules—every time, for every borrower.

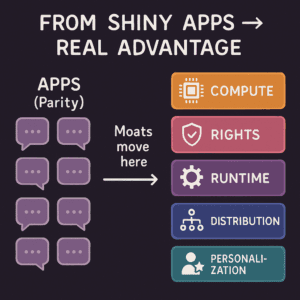

The Lending AI Landscape

Mortgage lending already has strict requirements. AI doesn’t replace those requirements. AI raises the standard, because it can scale mistakes fast.

When a lender uses AI anywhere in origination, regulators will expect the lender to prove four things:

1) Privacy

Borrower information must be protected. Financial institutions must follow privacy rules and explain information-sharing practices, including when data is shared with outside parties.

They also must protect customer information with a real security program, and they are responsible for making sure service providers protect it too.

2) Fairness

Lenders must not discriminate. If a model (meaning a system that makes predictions or recommendations) treats groups differently based on protected characteristics (such as race, sex, or, in certain situations, age), that can trigger serious fair lending risk.

Federal agencies have said they will enforce civil rights and consumer protection laws even when a company uses AI.

3) Explainability

Explainability means: you can tell a borrower and a regulator why something happened.

This is not optional, and it is a case where you need to show your work. The CFPB has emphasized that using AI does not reduce this duty.

4) Consistency

Consistency means: the system works the same way today as it did yesterday, and you can control changes.

Bank regulators have long expected “model risk management,” which is a fancy way of saying: test the tool, document it, monitor it, and govern it.

Now let’s translate those four ideas into a lender-friendly vendor questionnaire.

The Requirements

Before you ask a vendor about features, ask the “hard” questions. These are the ones that protect you in an exam, an audit, or a complaint investigation.

Privacy: “Where does borrower data go, and who can see it?”

In plain terms, your vendor should be able to explain and show:

- what borrower data the tool touches,

- whether any of that data is sent outside your environment,

- how it is protected while moving and while stored.

If a vendor says they “don’t know” where data goes, that’s not a small issue. If the vendor puts the onus back on you to “not send any borrower data,” that’s code for “that’s your problem, not mine.”

Privacy rules in financial services don’t just ask you to intend to protect data. They expect a real program, and they expect you to manage your vendors too. (Federal Trade Commission)

The Gramm-Leach-Bliley Act (GLBA) also expects clear privacy practices around information sharing.

A strong answer sounds like:

- “We encrypt data while it’s being sent and while it’s stored.” (Encryption means the data is scrambled so outsiders can’t read it.)

- “We don’t use public AI systems with borrower data.”

- “We keep sensitive information from being stored longer than needed and can show you our policies that enforce this.”

Those guardrails are also consistent with practical AI security guidance we see across mortgage automation teams: don’t persist sensitive info unless needed for compliance and encrypt data in transit and at rest.

As always, ask to see both the procedure and the control the vendor has in place to enforce it.
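To make “the control that enforces this” concrete, here is a minimal Python sketch of one kind of retention control: a job that lists stored records older than an agreed retention window so they can be deleted. The record fields, the legal-hold flag, and the retention period are hypothetical; real retention periods depend on the record type and on your own legal and regulatory obligations, which this sketch does not encode.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class StoredRecord:
    """A stored item containing borrower data (hypothetical schema)."""

    record_id: str
    created: date
    legal_hold: bool = False  # hypothetical flag for records that must be kept


def records_due_for_deletion(
    records: list[StoredRecord], retention_days: int, today: date
) -> list[str]:
    """IDs of records older than the retention window and not on hold."""
    cutoff = today - timedelta(days=retention_days)
    return [r.record_id for r in records if r.created < cutoff and not r.legal_hold]


if __name__ == "__main__":
    stored = [
        StoredRecord("doc-1", date(2024, 1, 1)),
        StoredRecord("doc-2", date(2024, 6, 1)),
        StoredRecord("doc-3", date(2023, 1, 1), legal_hold=True),
    ]
    # With a hypothetical 90-day window, only doc-1 is past due and not on hold.
    print(records_due_for_deletion(stored, retention_days=90, today=date(2024, 7, 1)))
```

In practice, you would want the vendor to show that a job like this runs on a schedule and logs what it deleted, since that log is what proves the written policy is actually followed.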

Fairness: “Can this tool create a ‘digital redlining’ problem?”

Fair lending risk doesn’t only come from a rule that says “deny these borrowers.” It can come from proxy signals. A proxy signal is something that stands in for a protected trait. For example, ZIP code can sometimes act like a proxy for race due to historic patterns.

Regulators have made it clear they are watching automated systems for discrimination and bias.

Even outside mortgages, housing-related AI tools have drawn serious scrutiny for discriminatory outcomes, which is a warning sign for any lender thinking, “This tool is only operational.”

So you want to know:

- Does the vendor test outcomes across different groups?

- Do they monitor results after go-live?

- Do they have a process to fix problems and verify that they are fixed?

Strong answers include:

- “We have written fairness test plans that run before go-live, with pass/fail criteria. The depth of our test plans shows how seriously we treat this requirement, as does our commitment to thorough regression testing of all updates.”

- “We perform ongoing monitoring after launch. We have a schedule for regular fairness reviews and can show you the triggers that are in place to alert us when issues arise.”

- “We can show you audit-ready logs of tests, versions, approvals, and outcomes.”
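To make “test outcomes across different groups” concrete, here is a minimal Python sketch of one common screening check: compare each group’s approval rate with the most-favored group’s rate. The 0.8 cutoff is the “four-fifths” heuristic borrowed from employment-testing guidance; it is an assumption for illustration, not a Reg B or fair lending standard, and a real fair lending analysis would also account for legitimate credit factors. The group names and counts are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GroupOutcome:
    """Application and approval counts for one borrower group (hypothetical data)."""

    name: str
    applications: int
    approvals: int

    @property
    def approval_rate(self) -> float:
        if self.applications == 0:
            raise ValueError(f"{self.name} has no applications")
        return self.approvals / self.applications


def adverse_impact_ratios(groups: list[GroupOutcome]) -> dict[str, float]:
    """Each group's approval rate divided by the highest group's approval rate."""
    best = max(g.approval_rate for g in groups)
    if best == 0:
        raise ValueError("no group has any approvals")
    return {g.name: g.approval_rate / best for g in groups}


def flag_groups(groups: list[GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Names of groups whose ratio falls below the assumed screening threshold."""
    ratios = adverse_impact_ratios(groups)
    return [name for name, ratio in ratios.items() if ratio < threshold]


if __name__ == "__main__":
    sample = [
        GroupOutcome("Group A", applications=1000, approvals=800),
        GroupOutcome("Group B", applications=500, approvals=300),
    ]
    # Group B approves at 60% vs. 80% for Group A, a ratio of about 0.75.
    print({name: round(r, 2) for name, r in adverse_impact_ratios(sample).items()})
    print(flag_groups(sample))
```

A flag from a check like this is a prompt for deeper review, not proof of discrimination. The point is that a vendor should be able to show you checks of this kind, the data behind them, and what happens when one fails.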

Explainability: “If this tool influences an outcome, can we explain clearly how it came to its conclusions?”

This is where many AI products fail.

Under ECOA/Reg B, if you deny credit or change terms in a way that hurts the borrower, you must provide specific reasons (not vague ones).

The CFPB has emphasized this expectation even when complex AI models are used.

So your vendor should be able to show:

- What factors drove the result,

- How those factors map to clear, human-readable reasons,

- How the lender can review and override (especially when regulators come knocking)

If the tool can’t explain itself, the lender ends up exposed.

Strong answers include…

- "We provide clear, borrower-ready explanations tied to the exact data/document/rule used."

- "Our outputs map to specific, compliant reason statements when outcomes worsen for a borrower."

- "We have specific guardrails in place to guard against “made-up” answers, and we can show how they are triggered and what happens when they are."

- "We maintain a complete audit trail of recommendations, human decisions, and final outcomes that is accessible to our customers."
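As a rough illustration of “outputs map to specific reason statements,” here is a minimal Python sketch. It assumes the vendor’s model reports a per-factor contribution score where negative values pushed the outcome against the borrower. The factor names, the reason wording, and the cap of four reasons are hypothetical choices, not a vendor API or regulatory text. Note that it refuses to produce a reason for a factor that has no approved wording, rather than improvising one.

```python
from __future__ import annotations

# Hypothetical mapping from model factor names to approved, human-readable reasons.
# In practice, Compliance would own and approve this table.
REASON_STATEMENTS: dict[str, str] = {
    "dti_ratio": "Debt-to-income ratio too high",
    "credit_history_length": "Length of credit history insufficient",
    "recent_delinquencies": "Delinquent past or present credit obligations",
    "income_verification": "Unable to verify income",
}


def adverse_action_reasons(
    contributions: dict[str, float], max_reasons: int = 4
) -> list[str]:
    """Reason statements for the factors that most hurt the outcome.

    Assumes a negative contribution means the factor worked against the borrower.
    """
    # Keep only harmful factors, most harmful (most negative) first.
    harmful = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    reasons = []
    for factor, _score in harmful[:max_reasons]:
        if factor not in REASON_STATEMENTS:
            # No approved wording: fail loudly instead of inventing a reason.
            raise KeyError(f"No approved reason statement for factor {factor!r}")
        reasons.append(REASON_STATEMENTS[factor])
    return reasons


if __name__ == "__main__":
    example = {
        "dti_ratio": -0.42,
        "recent_delinquencies": -0.10,
        "credit_history_length": 0.05,  # helped the borrower, so not a reason
    }
    print(adverse_action_reasons(example))
    # ['Debt-to-income ratio too high', 'Delinquent past or present credit obligations']
```

The design choice worth asking a vendor about is the last one: when the model leans on a factor nobody has written an approved reason for, does the system stop and escalate, or does it quietly produce something vague?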

Consistency: “How do we control changes, and prove performance over time?”

AI tools can drift. Drift means the tool’s behavior changes over time because the world changes, data changes, or the model is updated.

Regulators expect lenders to manage model risk with validation (testing), documentation, and governance (clear ownership and controls).

So you need to know:

- When updates happen

- Who approves them

- How you measure whether the tool still works as expected

Strong answers include…

- "We perform testing before each release and monitoring after release with defined thresholds."

- "Here are the predefined metrics we use to measure consistency, and here is proof that we measure and monitor them."
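“Monitoring with defined thresholds” can be as simple as comparing how a tool’s scores are distributed now versus at validation. Here is a minimal Python sketch using the population stability index (PSI), one widely used drift metric. The score bands, the shares, and the 0.10 / 0.25 thresholds are common rules of thumb assumed for illustration, not regulatory values, and a vendor may reasonably use different metrics.

```python
from __future__ import annotations

import math


def population_stability_index(
    expected: list[float], actual: list[float], floor: float = 1e-6
) -> float:
    """PSI between two bucketed distributions (each list of shares sums to ~1).

    PSI = sum over buckets of (actual - expected) * ln(actual / expected).
    A small floor avoids log(0) when a bucket is empty.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of buckets")
    psi = 0.0
    for exp_share, act_share in zip(expected, actual):
        exp_share, act_share = max(exp_share, floor), max(act_share, floor)
        psi += (act_share - exp_share) * math.log(act_share / exp_share)
    return psi


def drift_status(psi: float, warn: float = 0.10, alert: float = 0.25) -> str:
    """Map a PSI value to a status using assumed rule-of-thumb thresholds."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "stable"


if __name__ == "__main__":
    # Hypothetical share of loans in each score band at validation vs. this month.
    baseline = [0.25, 0.25, 0.25, 0.25]
    this_month = [0.10, 0.20, 0.30, 0.40]
    psi = population_stability_index(baseline, this_month)
    print(f"PSI = {psi:.3f} -> {drift_status(psi)}")
```

Whatever metric a vendor uses, the useful questions are the same: what the baseline is, what the thresholds are, and who gets notified when one is crossed.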

The Simplified Vendor AI Questionnaire

You don’t need 80 questions…at least not upfront. You need the right questions, asked the right way.

Here are the questions we’d use as a lender, written in plain language.

Privacy

- “What borrower data does your tool touch, and where does it travel?”

- “Do you send any borrower data to outside systems that we do not control?”

- “How do you protect data while it moves and while it is stored?”

- “How long do you keep borrower data, and how do you delete it?”

- “What do you require from your own subcontractors (your vendors)?”

Fairness

- “How do you test that outcomes are fair across different borrower groups?”

- “What signals does the tool use that could act as a proxy for protected traits?”

- “If we find a fairness issue, what is your fix process and timeline?”

- “Can we turn off specific factors or rules if we think they create risk?”

Explainability

- “If your tool influences a credit outcome, can it produce specific reasons we can use in an adverse action notice?”

- “Can we see examples of explanations from real cases?”

- “Can a loan officer or underwriter understand how the AI reached its conclusion or recommendation without help from your engineering team?”

Consistency

- “How do you test the tool before release, and how do you monitor it after release?”

- “How often does it change, and how do we approve changes?”

- “How do you prevent different branches or users from getting different results for the same situation?”

- “What reports can we pull to prove the tool is working as expected over time?”

If you ask those questions and the vendor answers clearly, you’re talking to a serious partner. If they dodge, overpromise, or hide behind buzzwords, you’ve learned something valuable before you signed a contract.

Think About It

AI can absolutely help lenders move faster and lower costs. It can reduce “stare-and-compare” work, speed up document handling, and guide teams to exceptions instead of forcing them to touch every file. (That’s the good version of automation: let clean loans move, and route problems to the right people.)

But speed without guardrails creates a different kind of cost: complaints, cures, buybacks, and reputational damage.

So the goal isn’t “use AI.” The goal is:

Use AI in a way you can defend.

Defend to a borrower. Defend to an auditor. Defend to a regulator.

That starts with your questionnaire, not a fancy demonstration.
